Concepts

Tables

Tables represent the location and, optionally, the column types of a SQL Database table. They are used in most Astro SDK tasks and decorators.

There are two types of tables:

Persistent Table

These are tables that are of some importance to users and will we persist in a database even after a DAG run is finished and won’t be deleted by When to use the cleanup operator. Users can still drop them by using

drop_table operator(to be replaced with ref). You can create these tables by passing in anameparameter while creating aastro.sql.table.Tableobject.output_table=Table(name="expected_table_from_s3", conn_id="postgres_conn"),

Temporary Tables

It is a common pattern to create intermediate tables during a workflow that don’t need to be persisted afterwards. To accomplish this, users can use Temporary Tables in conjunction with the When to use the cleanup operator task.

There are two approaches to create temporary tables:

Explicit: instantiate a

astro.sql.table.Tableusing the argument temp=TrueImplicit: instantiate a

astro.sql.table.Tablewithout giving it a name, and without specifying the temp argumentoutput_table=Table( conn_id="postgres_conn", ),

How load_file Works

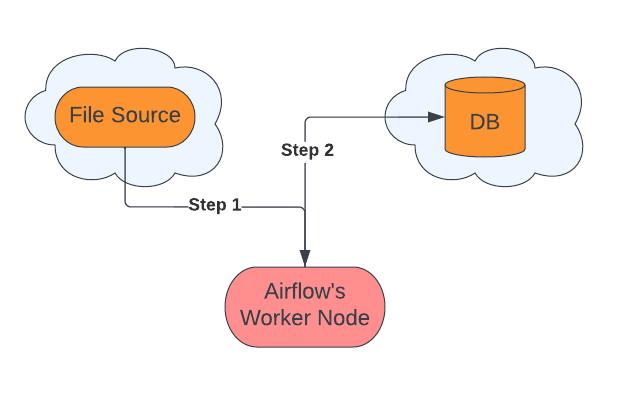

When we load a file located in cloud storage to a cloud database, internally the steps involved are:

Steps:

Get the file data in chunks from file storage to the worker node.

Send data to the cloud database from the worker node.

This is the default way of loading data into a table. There are performance bottlenecks because of limitations of memory, processing power, and internet bandwidth of worker node.

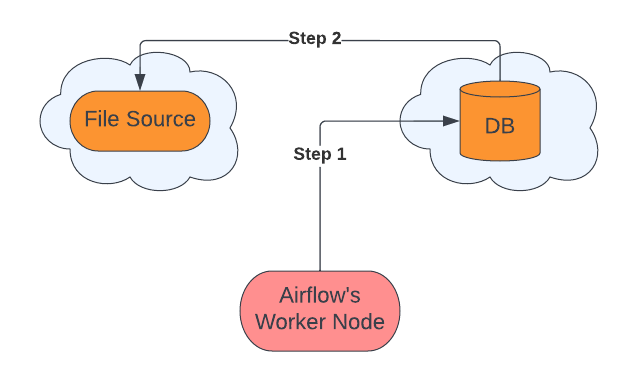

Improving bottlenecks by using native transfer

Some of the cloud databases like Bigquery and Snowflake support native transfer (complete list of supported native transfers Supported native transfers) to ingest data from cloud storage directly. Using this we can ingest data much quicker and without any involvement of the worker node.

Steps:

Request destination database to ingest data from the file source.

Database request file source for data.

This is a faster way for datasets of larger size as there is only one network call involved and usually the bandwidth between vendors is high. Also, there is no requirement for memory/processing power of the worker node, since data never gets on the node. There is significant performance improvement due to native transfers as evident from benchmarking results.

Note - By default the native transfer is enabled and will be used if the source and destination support it, this behavior can be altered by the use_native_support param.

Templating

Templating is a powerful concept in Airflow to pass dynamic information into task instances at runtime. Templating in Airflow works exactly the same as templating with Jinja in Python: define your to-be-evaluated code between double curly braces, and the expression will be evaluated at runtime.

The parameter list passed to the decorated function is also added to the context which is used to render template. For example:

@aql.transform

def union_top_and_last(first_table: Table, second_table: Table):

"""Union `first_table` and `second_table` tables to create a simple dataset."""

return """

SELECT Title, Rating from {{first_table}}

UNION

SELECT Title, Rating from {{second_table}};

"""

More details can be found at airflow templates reference